More AI Tools Won't Transform Your GTM. AI Infrastructure Will.

Why I stopped thinking in AI tools and started building AI systems

As we head into Thanksgiving, I’ve been reflecting on the work I’m proudest of this year. I built some things I’m excited about: automated account analysis, scaled ad creation, and account matching workflows. And last week, I was honored to receive an internal award for these projects.

But looking back, what I’m most thankful for isn’t launching the individual tools. It’s the deeper shift for me that AI unlocked: learning how to architect, automate, and scale GTM operations in ways I couldn’t have imagined a year ago. I know it might sound cheesy, but I’ve genuinely told several people this week how thankful I am for AI.

So today, the evening before Thanksgiving, I want to unpack what I’ve learned about building the infrastructure that lets AI transform how teams work.

The Gap Between AI Usage and AI Impact

Everyone talks about using AI. We see it in news articles and social posts, and we hear it from friends and colleagues. But most companies are focused on surface-level, individual productivity tools: Copilot, ChatGPT, pick your LLM. Employees and executives alike use these tools daily, which creates a sense of progress and improved productivity.

But it’s not transformation.

Leadership, or those unfamiliar with AI workflows, often see AI as email generation, content writing, and document summarization. These are helpful, but they don’t materially change GTM efficiency. These organizations think they’re “doing AI” when they’re just adding digital assistants. To get real impact, we need to move from Assisting to Performing.

What Teams Actually Need: AI That Performs Work

So what’s the difference?

An AI that assists with work, like a chatbot, gives individual feedback, helps draft emails, copy, or marketing assets, or researches a single company. This is valuable, but it scales linearly. One person gets a little faster.

An AI that performs work operates at scale. It creates a workflow, not just a response. It can:

Connect directly to CRM data

Perform research across multiple trusted sources (earnings calls, 10-Ks)

Map insights to internal messaging and specific use cases

Create and distribute assets automatically

Auto-update systems of record

This is the difference between a chat interface and an engine.

Building an Internal GTM Intelligence Engine

I’ll use the Analysis Dossier as an example, since I’ve written about it before. What makes it different from asking ChatGPT to “research Company X” isn’t the model, it’s the infrastructure I built around it.

At a high level, this infrastructure does four things that a standalone chatbot cannot:

1. Grounds AI in real source data. The workflow automatically pulls actual text from public financial documents using APIs. We aren’t asking an LLM to “guess” about a company; we are feeding it specific, structured data to analyze. Without this grounding, you get hallucinations. With it, you get analysis citing specific quotes and numbers.

2. Connects insights to internal context. Using semantic search, I built a layer that maps external data to our internal customer stories and value props. The AI connects the dots between “Company X’s Q3 challenges” and “Our Solution Y.”

3. Generates user-ready outputs. The result isn’t a chat response; it’s a finished deliverable (slides, summaries) ready for a client meeting. The user doesn’t prompt; they trigger a workflow and get an asset.

4. Integrates with the stack. Everything writes back to the CRM and document storage. There is no new login to manage.
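To make the four steps concrete, here is a minimal sketch in Python. It is not the actual Analysis Dossier implementation. Every name in it (`build_dossier`, `run_workflow`, the `Dossier` fields, the sample stories) is hypothetical. A simple word-overlap cosine score stands in for real semantic search, a formatted string stands in for the LLM-generated deliverable, and a plain dictionary stands in for the CRM.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Dossier:
    account: str
    quote: str          # verbatim sentence pulled from the source document
    matched_story: str  # internal customer story judged most relevant
    summary: str        # stand-in for the finished deliverable


def tokenize(text: str) -> Counter:
    """Lowercase bag of words; a stand-in for an embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bags of words."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def best_match(passage: str, stories: dict[str, str]) -> str:
    """Step 2: map external text to the closest internal story."""
    query = tokenize(passage)
    return max(stories, key=lambda name: cosine(query, tokenize(stories[name])))


def build_dossier(account: str, filing_text: str, stories: dict[str, str],
                  keyword: str = "challenge") -> Dossier:
    # Step 1: ground the analysis in real source text, not model recall.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", filing_text) if s.strip()]
    if not sentences:
        raise ValueError("filing_text contains no sentences")
    quote = next((s for s in sentences if keyword in s.lower()), sentences[0])

    # Step 2: connect the grounded quote to internal context.
    story = best_match(quote, stories)

    # Step 3: produce a user-ready output (an LLM call would go here).
    summary = f'{account}: "{quote}" Relevant story: {story}'
    return Dossier(account, quote, story, summary)


def run_workflow(account: str, filing_text: str, stories: dict[str, str],
                 crm: dict) -> Dossier:
    dossier = build_dossier(account, filing_text, stories)
    # Step 4: write back to the system of record (a dict in this sketch).
    crm[account] = {"dossier_summary": dossier.summary,
                    "matched_story": dossier.matched_story}
    return dossier


if __name__ == "__main__":
    crm_records: dict = {}
    result = run_workflow(
        "Company X",
        "Revenue grew 4% in Q3. Our main challenge was rising cloud "
        "infrastructure costs. We expect margins to improve.",
        {
            "Cost Optimization Story": "How a retailer reduced cloud infrastructure costs",
            "Onboarding Story": "How a bank shortened employee onboarding time",
        },
        crm_records,
    )
    print(result.summary)
```

The point of the shape is that the user never writes a prompt. A trigger calls `run_workflow`, and the output lands in a record they already look at.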

External Tools vs. Internal Infrastructure

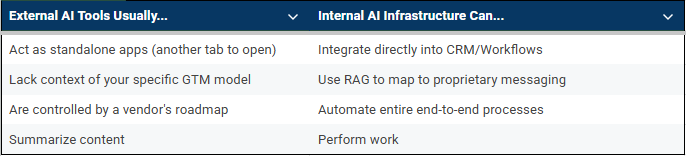

I’m not saying external AI tools are useless - they are often excellent. But there’s typically a difference between what they do and what internal infrastructure can do.

And to be clear, I still buy AI tools. I love tools like Gong or specialized ABM platforms. The distinction isn’t really “Build vs. Buy.” It’s more like Vertical vs. Horizontal.

We BUY Vertical Intelligence. Tools like Gong are incredible at creating deep insights within a specific function (like analyzing sales calls). We should buy these because we can’t replicate their data models.

We BUILD Horizontal Infrastructure. We build the pipes that take that intelligence out of the tool and move it across our stack to where it creates value for other teams.

Think of it this way: You buy the engine, but you build the car. You need the powerful vendor engine, but you need to build the frame that connects that engine to your specific wheels, steering, and passengers to actually get where you’re going.

But who maintains the car, you might ask. It’s the common objection to building this type of internal infrastructure: “Who maintains this software when it breaks?”

It’s a valid question, but there is a difference between building software and orchestrating logic.

We aren’t managing servers or writing compiled code. By using enterprise-grade low-code platforms and secure APIs (like Azure OpenAI), we rely on IT to own the platform (security, uptime, governance) while Ops owns the solution (prompts, logic, workflows).

This partnership brings AI usage out of personal ChatGPT windows and into a governed, secure environment that Ops can monitor and maintain.

Why AI Infrastructure Matters for Ops Teams

Unlocking this capability changes what’s possible for Marketing, Sales, and Operations teams. The people who know where data lives, how to structure it, who work with tools every day, and have experience with low-code automation can make their organizations dramatically more agile and impactful.

Teams can build workflows that pull in external reports and use AI to analyze campaign reports, email reports, and webinar performance. Or create marketing documents at scale. Or automate project management intake processes with conflict detection and resolution. (I’ve already built some of these.)

The Vision: A Unified AI Operational Layer

This infrastructure approach can also unlock the “Holy Grail” of B2B marketing: True Orchestration.

Right now, most GTM stacks are collections of disconnected point solutions. Your MAP sends emails, your ABM platform runs ads, and LinkedIn is its own silo. We envisioned “orchestration,” but usually, we just delivered manual coordination.

But if we start thinking about an AI operational layer, we can change the paradigm. I’m imagining a system where:

Analysis is Unified: We pull signals from tools (Intent, Engagement) to form a single view of the account that is kept up-to-date in CRM.

Messaging is Standardized: The AI takes our core value props and dynamically adapts them for the channel: email vs. display vs. sales script, ensuring consistency.

Execution is Coordinated: The AI layer decides what message goes where based on buying stage. The individual tools (MAP, ABM) become execution engines, not strategy owners.
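As a rough illustration of these three ideas working together, here is a small Python sketch. It is a thought experiment, not a system I have built: the score scale, the stage thresholds, the stage-to-channel mapping, and the message templates are all made-up assumptions, and names like `AccountView` and `plan_action` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AccountView:
    """Unified view of an account, assembled from separate tools."""
    name: str
    intent_score: float      # assumed 0..1 scale from an intent tool
    engagement_score: float  # assumed 0..1 scale from MAP/ABM engagement


# Hypothetical mapping: which channel leads at each buying stage.
STAGE_TO_CHANNEL = {
    "awareness": "display",
    "consideration": "email",
    "decision": "sales_script",
}

# One core value prop, adapted per channel so messaging stays consistent.
CHANNEL_TEMPLATES = {
    "display": "{prop}. Learn more.",
    "email": "Hi {account} team, a quick note: {prop}. Worth a chat?",
    "sales_script": "Open with '{prop}', then ask about current priorities.",
}


def buying_stage(view: AccountView) -> str:
    """Derive a stage from combined signals (thresholds are illustrative)."""
    combined = (view.intent_score + view.engagement_score) / 2
    if combined >= 0.7:
        return "decision"
    if combined >= 0.4:
        return "consideration"
    return "awareness"


def plan_action(view: AccountView, value_prop: str) -> dict:
    """The AI layer owns strategy; channel tools only execute the plan."""
    stage = buying_stage(view)
    channel = STAGE_TO_CHANNEL[stage]
    message = CHANNEL_TEMPLATES[channel].format(account=view.name, prop=value_prop)
    return {"account": view.name, "stage": stage,
            "channel": channel, "message": message}


if __name__ == "__main__":
    hot = AccountView("Company X", intent_score=0.9, engagement_score=0.7)
    print(plan_action(hot, "Cut cloud costs without replatforming"))
```

In a real version, the returned plan would be handed to the MAP or ABM platform through its API, and a generative model would replace the fixed templates.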

The building blocks are here. We have the APIs, the automation tools, and the generative models. Although I don’t have all the details figured out yet, it’s something I can see building, piece by piece.

And I’m already doing this now: the Analysis Dossier connects external research to internal messaging. The ABM tool generates persona-specific content at scale. The next step is pushing content through channels based on triggers. We just need time and resources to build (new state-of-the-art AI model updates don’t hurt either).

GTM Teams Will Be Transformed by AI Systems, Not AI Apps

The future isn’t more tools. We’ve all seen the cycle: eager adoption of a new tool, followed by the fatigue of managing yet another login, admin panel, and reporting dashboard.

I think the answer is deeper, more integrated systems. Users who can check a box in the system they’re already using and trigger powerful workflows that actually work. I’ve seen this in our own adoption of the Analysis Dossier, where usage is high because the tool lives where people already work.

So I’d encourage teams to think beyond AI use cases and start thinking in terms of pipelines, workflows, and systems. Build the connective tissue, not new appendages. The highest leverage comes from infrastructure that makes everything else work better, not from adding more tools to the stack.

Lastly, Happy Thanksgiving to everyone reading. I’d love to hear what AI infrastructure you’re building or thinking about building. Let me know in the comments!

Love this Lily! I feel like we've been saying this for decades now regarding marketing tools & technology: the value is realized from the strategy, alignment to GTM goals, config & practical application/integration of them, not from the capabilities of the tools themselves. Love that you're building that AI-forward culture in your org! I'd just add that for marketers unsure on where to start with that practical application of AI or for teams that don't have a GTM Engineer or mature Ops function, it's always helpful to think outcome first. It could be small or large - like 'we want to reduce the demo no-show rate' or 'we want Sales to have more impactful first calls to improve conversion to Stage X opps' - but that level of focus makes it much easier to build the systems & processes to deliver on that goal and test what works/what doesn't. Then you'll find a level of momentum & excitement internally about 'what else can we improve!' to further inform your AI infrastructure. And by then, there'll be 50 more AI tools on the market to look to for inspo 😁

Couldn't agree more. How do you pitch 'architecting' over 'Copilot'?