More Agents, Worse Results: When Simple Beats Sophisticated

What I learned when my multi-agent system produced worse results than the "simple" approach.

Recent research suggests that 95% of AI pilots fail to reach production. While those numbers might not tell the whole story, the underlying problem is real: AI promises often lead to poor adoption and underwhelming results.

In my intro post, I mentioned building AI automation for account research (the “Analysis Dossier”)—a tool that pulls 10Ks, earnings calls, and maps insights. This tool has now generated over 700 reports and counting. But the path to that success wasn’t just about getting AI to work, it was learning from the failures along the way. This is the story of one of those failures, and how it taught me more about AI workflows that success stories rarely expose.

I was trying to improve a new AI-powered sales tool, we’ll call it the “Strategy Brief”, which takes the account intelligence from the Dossier and builds a more specific business case for strategic, complex accounts.

(I’ll do a breakdown of these tools and how these are built in a future post. Both are built using low-code platforms and use multiple steps to extract information via APIs, Python, and Azure.)

The Problem

Sellers were using the Strategy Brief reports regularly, but I wasn’t satisfied. I reviewed the outputs and thought that they were too generic. The reports felt like a template that just filled in the blanks, like Mad Libs, and not real analysis.

So I started experimenting. I was seeing quality outputs from multi-agent workflows I was using for other use cases, and I thought, “Surely if I build a multi-agent workflow, with each agent passing insights to the next, the results will be better.”

And other AI builders like Justin Norris had seen great results breaking prompts into smaller chunks, so I was convinced this was the solution.

After a day or so of development (a timeline made possible thanks to AI-assisted help), I had a sophisticated 5-agent system, one focusing on each component of the analysis:

Strategic Context Agent

Value Assessment Agent

Stakeholder Psychology Agent

Competitive Positioning Agent

Action Planning Agent

But the output was worse than what I started with. And not just “still generic,” but actually worse. Where the original report had pulled specific details, the new approach produced even more “template” language. Benchmarks with no base numbers, “Millions in savings” with no methodology.

I was genuinely confused. I had built a MORE sophisticated system, with specialized agents. It should be better, right?

Solving the Mad Libs Issue

I’ll back up a little. I work at an enterprise software company, and I was trying to generate strategic intelligence reports for complex, high-value opportunities. These are Fortune 1000 companies, and to win, sellers need executive-ready business cases.

Specific analysis like this (an example): “Based on your $X billion spend disclosed in your 10K and industry benchmarks showing 5-10% contract leakage, we estimate a $Y million exposure. This directly impacts the $X million cost savings target your CEO committed to in last quarter’s earnings call.”

That level of specificity requires:

Parsing of 100+ page financial filings

Extracting strategic priorities from earnings transcripts

Connecting our capabilities to their stated challenges

Calculating ROI using real numbers and benchmarks

Citing sources so users trust the analysis

The original system I built did this fine. But it had that Mad Libs problem. The specific company details felt inserted, not integrated.

The Experiment

I started with breaking down my AI prompts into discrete steps:

Agent 1: Extract strategic scenario and urgency from earnings calls and financial documents

Agent 2: Map buyer persona priorities based on that strategic context

Agent 3: Identify likely objections and mitigation strategies to help sellers

When testing these initially, the outputs worked really well, so I added even more components with 5 specialized agents, each handling one aspect of the analysis, culminating in two final synthesis steps for the detailed report.

The full multi-agent architecture looked like this:

Agent 1: Strategic Context Analysis

Input: 10K filing, earnings transcript, company news

Output: Strategic scenario classification, urgency level, timeline pressure, key evidence

Purpose: Identify what’s really driving their business decisions right now

Agent 2: Value Assessment

Input: Agent 1’s strategic scenario + knowledge base of benchmarks (via Azure)

Output: Top value drivers, industry benchmarks, opportunity sizing

Purpose: Quantify the business case using proven metrics

Agent 3: Stakeholder Psychology

Input: Agent 1’s scenario + Agent 2’s value drivers

Output: Executive buyer persona profiles, hesitations, seller mitigation strategies

Purpose: Map the political landscape and decision-making process

Agent 4: Competitive Intelligence

Input: Previous agents’ insights + competitive data

Output: Win themes, competitive positioning, trap questions

Purpose: Develop strategy to position against likely alternatives

Agent 5: Action Planning

Input: All previous analysis

Output: Execution roadmap, next steps, stakeholder playbook

Purpose: Translate insights into specific actions sellers can take

Then, the next two steps synthesized everything into the comprehensive Strategic Brief report. It made perfect sense—consulting teams work this way, with specialists for finance, strategy, and stakeholders. I thought AI could work in a similar way.

The Confusing Results

I tested the new workflow. Here’s an example of what it produced:

Top 5 Reasons [Company] Should Leverage Our Platform:

Capture cost savings by eliminating revenue leakage

Enforce compliance with AI-driven tracking

Achieve ROI through rapid, incremental deployment

This was…much worse. These were things any software company could do. At least the prior version referenced actual numbers and cited executives by name. These results were pure, generic template language.

I ran a few more test cases—same problem. The more sophisticated workflow produced less sophisticated results.

What Went Wrong? The “Telephone Game” Effect

I spent hours debugging, checking prompts, reviewing agent outputs individually. Each agent’s output looked fine in isolation.

The strategic agent correctly identified cost optimization as a priority.

The value agent correctly found relevant benchmarks.

But as I tracked the data flow, I realized what the final synthesis steps couldn’t see:

The actual earnings call transcript where the CEO said “$X million in cost savings this year.”

The specific spend number from the 10K.

The exact quote about the “board-level mandate.”

I had designed a system where each agent summarized and abstracted, passing a summary to the next agent. By the time the information reached the final steps, all the specific, hard evidence had been stripped away.

It was the “Telephone Game” effect. I was asking the final agent to write a book report using only the Cliff Notes of the Cliff Notes.

Agent 1 reads: “We must deliver $X million in cost savings this year. This is a board-level mandate.”

Agent 1 outputs: “Strategic scenario: COST OPTIMIZATION. Urgency level: HIGH. Key evidence: Cost savings mandate from leadership.”

We just lost the $X million target and the “board-level mandate” quote.

Agent 2 receives that summary. It outputs: “Primary value driver: Procurement optimization. Benchmark: 5-10% spend optimization achievable.”

We just lost the connection to the $X million target.

Agent 3 receives those summaries. It outputs: “Primary decision maker: CFO. Key concern: ROI and cost savings validation.”

This is now generic enough to apply to any CFO at any company.

Each abstraction layer diluted the details, and after 5 layers, the original richness was gone.

A Simpler, Better Fix

I went back and looked at the simpler 3-step analysis I tested in the beginning. It had:

Strategic scenario detection

Persona priority mapping

Stakeholder hesitation identification

And then the final synthesis steps

The difference? All steps had access to all of the original source information.

When the final prompt said to “reference the CEO’s specific cost savings commitment,” it could go find that quote. The architecture was simpler, but the final synthesis step had richer inputs.

I had been so focused on making the agents sophisticated that I’d accidentally starved the synthesis step of the context it needed.

I could try to fix the multi-agent system and give each agent access to all original sources but that would mean:

5 agents × 10-15k context tokens each = 60-90k total tokens (and guaranteed LLM API limits)

Higher cost

Much slower processing

Way more complexity to maintain

Instead, I improved the original 3-step system to add explicit instructions:

Step 1: Strategic Scenario Analysis now explicitly extracts and tags evidence:

- Quote: “[exact text]” (Source: [document, page])

- Financial Target: $[amount] (Source: [filing, section])

- Timeline: “[timeframe]” (Source: [speaker, context])Step 2 & 3: Persona/Stakeholder Mapping receives Step 1 output PLUS all original sources.

Step 4 & 5: Report Synthesis receives all previous outputs PLUS all original sources.

I also added “quality gates” to the final synthesis prompts:

Include minimum 3 calculations showing: (calc: $X × Y% = $Z; Assumptions: [...])

Include minimum 5 direct quotes with attribution: “Quote” - Name, Title, Source

Use [Company Name] throughout the report (avoid generic “the company”)

Reference specific executives by name and title

Cite specific sources for every claim

Ensure output could ONLY apply to this specific company

I ran the same test and the output was much better. See example:

Based on the $X billion spend disclosed in your 10-K (page 47) and industry benchmarks showing 5-10% contract leakage, we estimate $Y to $Z billion in annual exposure.

(calc: $XB × 5% = $YB modeled opportunity; Assumptions: conservative low-end benchmark)

This directly impacts the $X million cost savings target your CEO committed to in Q2 2025: “We must deliver $X million in cost savings this year. This is a board-level mandate, and we’re laser-focused on execution.” (Source: Q2 Earnings Call, CEO remarks)

What I Got Wrong About Specialization

I assumed that because human teams benefit from specialization, AI agents would too. But human consultants can:

Share context through meetings

Can ask clarifying questions

Access to all source documents when needed

Remember key facts across conversations

AI agents in a sequential chain:

Only see what’s explicitly passed to them

Can’t ask for clarification

Have no shared memory

Are stateless between calls

The specialization I was trying to create actually created information siloes.

The Tasks That Need Context vs. The Tasks That Don’t

Here’s what I learned about which tasks benefit from separation and which need full context:

Good candidates for separate agents (Extraction Tasks):

Extracting structured data from unstructured text

Classifying scenarios into predefined categories

Tagging and annotating information

These tasks process inputs and output structured data. They don’t need the full picture to do their one job.

Bad candidates for separate agents (Synthesis Tasks):

Creating evidence-based arguments

Calculating opportunity sizing with specific numbers

Writing persuasive, customized content

Synthesizing multiple sources into coherent narratives

These tasks need rich context and benefit from seeing the full picture, not just summaries. My mistake was treating value assessment and competitive positioning as extraction tasks when they are really synthesis tasks.

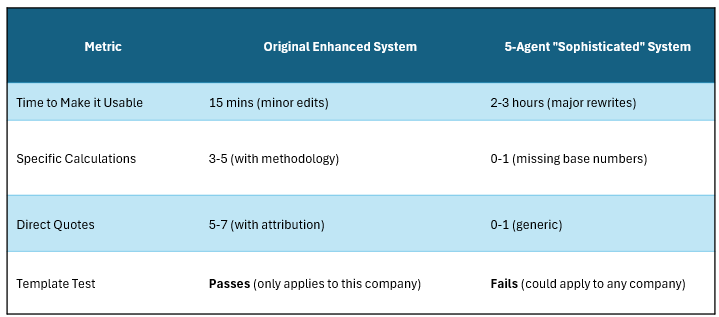

When I look in comparison, it’s not even close:

When Multiple Agents Actually Make Sense

I don’t want to suggest that multi-agent workflows are always wrong to use. There are definitely legitimate use cases that work really well:

Processing different data types: An agent for 10Ks, an agent for news articles, an agent for CRM data. These can run in separately without any data loss.

Different tools for different jobs: An LLM for text analysis, a code interpreter for financial modeling, a tool for visualization.

Pre-processing large documents: I use a step to “pre-read” a long earnings call and extract the most important quotes verbatim. The key is to pass the full quote and context downstream, not just a summary.

Managing prompt size: Breaking one giant 50,000-token prompt into three 15,000-token steps can be more reliable.

What I’d Recommend to Someone Starting This Journey

If you’re building AI workflows for content generation and analysis, here’s what I’d recommend:

Start with the synthesis step. What does the final output need? Specific quotes? Calculations? Design backward from there.

Give synthesis steps rich inputs. The step creating the user-facing content should have the most context, not the least.

Use pre-processing for extraction, not synthesis. It’s fine to have earlier steps that pull out structured data, but the final step should still have access to the original sources.

Test for specificity, not sophistication. Count the calculations. Count the direct quotes. See if the output could apply to a different company. These metrics matter more than the architectural diagram.

Watch for the “Telephone Game.” If information passes through 5 sequential steps, trace what the final step actually sees. You might be shocked at how much detail has evaporated.

The goal isn’t to build an impressive architecture diagram. It’s to generate outputs that your users can actually use. Sometimes that means more sophistication. Sometimes it means less.

In my case, it meant recognizing that the “simple” approach I was trying to improve was actually architecturally sound. It just needed better prompts. I hadn’t been explicit enough about requiring specific evidence, showing calculations, and company-specific details. When I added those to the original workflow, the Mad Libs problem mostly disappeared.

At the end of it all, I didn’t need five specialized agents, I needed better instructions for the synthesis steps and specific information in the pre-processing steps. Even though the sophisticated workflow looked impressive, the simple solution actually worked better. That’s when simple beat sophisticated.

This is also why so many AI pilots fail—not because the technology doesn’t work, but because we’re not thinking through what our specific use case actually requires. We can over index in either direction: overcomplicating when simple could work, or oversimplifying when complexity is genuinely needed.

The real work is understanding your requirements first, then designing the right process for that problem. For teams facing AI implementation, that difference could mean the gap between joining the 95% of failed pilots and building tools your team actually uses.